Commercial Pain Points: Regression Errors

Published: Friday, September 5, 2025

Author: Daniel Patterson

Vendor Negligence and the Illusion of Testing

Commercial software vendors often tout their investment in automated testing as proof of quality. In practice, however, these test suites are little more than superficial smoke tests. At their core are so-called unit tests, which focus narrowly on isolated functions at the atomic level, verifying, for example, that 2 + 2 = 4. That has value, but it is also highly misleading. Real-world software doesn't operate in single-function silos. On the contrary, it operates as a complex web of interdependencies.

Regression errors thrive in these gaps. A function might behave correctly in isolation, but when it is chained with five or ten others, subtle interactions emerge. These interactions can ripple across entire systems, sometimes breaking mission-critical workflows or disrupting end users' lives in ways no unit test could anticipate. The sheer combinatorial complexity of such scenarios makes them virtually impossible to cover exhaustively with automation. Automated tests may catch small, obvious missteps, but they can't anticipate the unpredictable.
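A small, hypothetical Python sketch shows how this can happen. The function names and the comma-separated invoice format are invented for illustration and are not drawn from any real product. Each function passes its own unit test, and a "refactored" parser still passes the very same test, yet the result of chaining the functions silently changes.

```python
# Hypothetical example: small functions that pass their unit tests in isolation,
# while their combination regresses after an "innocent" refactor.

def parse_amounts_v1(text: str) -> list[float]:
    """Original parser: returns every amount, in the order it appears."""
    return [float(part) for part in text.split(",")]


def parse_amounts_v2(text: str) -> list[float]:
    """Refactored parser: de-duplicates and sorts amounts.
    It still passes the original unit test below."""
    return sorted({float(part) for part in text.split(",")})


def total_due(amounts: list[float]) -> float:
    """Downstream consumer: sums every line item."""
    return sum(amounts)


def test_units() -> None:
    # Unit tests: each function checked on its own. All of these pass.
    assert parse_amounts_v1("1.5,2.0") == [1.5, 2.0]
    assert parse_amounts_v2("1.5,2.0") == [1.5, 2.0]
    assert total_due([1.5, 2.0]) == 3.5


# Chained behaviour on an invoice with two identical line items.
invoice = "10.0,10.0,5.0"
before = total_due(parse_amounts_v1(invoice))  # 25.0
after = total_due(parse_amounts_v2(invoice))   # 15.0: a duplicate item was dropped

if __name__ == "__main__":
    test_units()
    print(f"before refactor: {before}, after refactor: {after}")
```

The unit tests never exercise an invoice with duplicate line items, so the regression only surfaces when realistic data flows through the whole chain.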

The Missing Gorilla Test

In an earlier era of mechanical engineering, products were subjected to brutal physical tests. The classic Samsonite Luggage commercial, in which a gorilla slams suitcases against the walls, offers a fitting metaphor for what is missing in software. Today, instead of deliberate stress testing under real-world conditions, software is usually pushed through a thin regimen of checkboxes and assertions, then declared stable.

The deeper problem is that the people writing the software are often not its actual users. Enterprise developers building payroll systems don't run payroll themselves, and engineers coding medical-record software usually don't work in hospitals. This detachment creates a blind spot in both empathy and practical validation. Without applicable domain knowledge, engineers can't even imagine the creative gorilla scenarios that real users encounter daily.

The result is predictable. Products undergo no meaningful real-world testing until after they ship. Customers become unwilling beta testers, discovering flaws that should have been found and eliminated before release.

Users as Involuntary Beta Testers

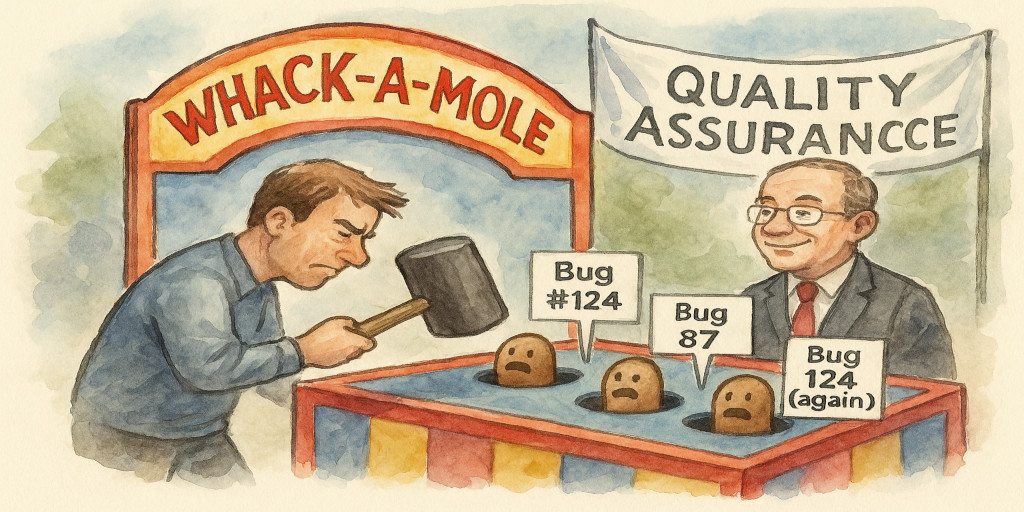

This is not an accident; it is vendor negligence. Commercial producers know their automated test grids can't replicate reality, but they choose speed and cost efficiency over thorough validation. They rely on customers to catch failures, and patching becomes a fix-break-repeat cycle.

From the customer's perspective, this is unacceptable. Buying software is not an invitation to participate in a never-ending experiment. Customers are justified in expecting each release to be better than the last, and never worse. Commercial vendors fail to meet that standard far too often.

The Cycle of Recurring Failures

What emerges from this pattern is not an occasional misstep but a systemic flaw. When vendors neglect real-world testing, the result is a perpetual carousel of recurring failures. Across modern technology, the same bugs keep reappearing in finance systems, healthcare software, operating systems, and beyond. Each cycle erodes confidence, wastes resources, and leaves customers wondering whether they are buying a finished product or renting a perpetually unstable one.

The root causes aren't mysterious. They are embedded in the way commercial software is built and maintained:

- Codebase Complexity. Vast legacy systems are brittle. A fix for a small bug may ripple unpredictably, resurrecting older problems elsewhere.

- Short Release Cycles. Modern agile pipelines emphasize shipping quickly, leaving little room for comprehensive regression testing.

- Poor Configuration Management. Updates frequently overwrite or reset user-defined settings, undoing hours of customization and creating a convincing illusion of regression even when the code hasn't changed.

- Commercial Incentives. Vendors optimize for new features, market timelines, and revenue streams, at the expense of stability. Thorough testing slows releases, so it is quietly sidelined.

- Customer Customization. Enterprise clients rarely run software out of the box. Patches and upgrades often break custom integrations, forcing customers to reapply workarounds and making regressions feel inevitable.
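The configuration point can be made concrete with a minimal sketch. It assumes settings are stored as a simple key-value dictionary; the setting names and both upgrade functions are hypothetical, not taken from any particular product. It contrasts an upgrade routine that replaces the user's settings with the new release's defaults against one that layers the user's values on top of those defaults.

```python
# Hypothetical settings and upgrade routines, for illustration only.
SHIPPED_DEFAULTS_V2 = {"theme": "light", "autosave_minutes": 10, "show_tips": True}


def naive_upgrade(user_settings: dict, new_defaults: dict) -> dict:
    """Replaces everything with the new defaults; user_settings is ignored,
    so every customization is silently lost."""
    return dict(new_defaults)


def preserving_upgrade(user_settings: dict, new_defaults: dict) -> dict:
    """Starts from the new defaults, then re-applies every value the user set.
    New keys get their defaults; customized keys keep the user's value."""
    return {**new_defaults, **user_settings}


user = {"theme": "dark", "autosave_minutes": 2}
print(naive_upgrade(user, SHIPPED_DEFAULTS_V2))
# {'theme': 'light', 'autosave_minutes': 10, 'show_tips': True}
print(preserving_upgrade(user, SHIPPED_DEFAULTS_V2))
# {'theme': 'dark', 'autosave_minutes': 2, 'show_tips': True}
```

To the user, the first routine looks exactly like a regression even though no feature code changed. The merge-based version is deliberately simple; a real migration would also have to handle renamed or removed settings, which this sketch ignores.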

Taken together, these factors reveal that regression errors are not random or accidental. They are the predictable byproduct of a vendor culture that prioritizes speed, profit, and superficial testing over genuine stability. This is why the same issues resurface again and again, across different platforms and industries, and why users are right to feel frustrated, or even betrayed, when they discover that a bug they thought was gone for good has simply been waiting to return.

The Open-Source Counterpoint: Testing Through Use

Contrast this with testing and verification in the open-source community. While open-source projects also use unit tests for quick validation, their real strength lies elsewhere: in continuous human interaction and lived use.

- Developers as Users. Many open-source maintainers rely on their own software in daily workflows. They use alpha and beta builds for their own productivity, entertainment, or infrastructure. Bugs that would never appear in a lab setting are caught immediately because the developers themselves feel the pain.

- Community as Consenting Beta Testers. Although long-term stable versions are typically available for users who don't have time to take part in testing, many open-source users understand the development model and willingly act as testers in their daily lives. Their feedback loops are fast, transparent, and constructive because they've opted into that role. Unlike commercial customers, they aren't blindsided into doing QA for a paid product that should already work as advertised.

- Slow, Iterative Refinement. Open-source projects often evolve gradually. They are rarely rushed to meet arbitrary release cycles. This organic pace allows testing, both automated and human, to act as layers of refinement, pushing software closer to real-world reliability over time.

- Real-World Context. Because open-source contributors often come from the same industries and use cases as the software's wider audience, they bring authentic scenarios into testing. Whether it's a developer debugging Linux on their workstation or a sysadmin stress-testing an Apache server, the result is a practical resilience that automated unit tests alone could never produce.

Toward a Higher Standard

Regression errors highlight the difference between superficial testing and meaningful validation. Commercial vendors, hiding behind automation, treat customers as expendable testers. Open-source communities, conversely, demonstrate that genuine reliability emerges only when humans use, stress, and live with the software themselves.

If customers demand more, not just bug fixes but assurance that resolved problems won't return, vendors will be forced to abandon the illusion of testing grids and adopt practices that mirror the open-source ethos of testing through use.
